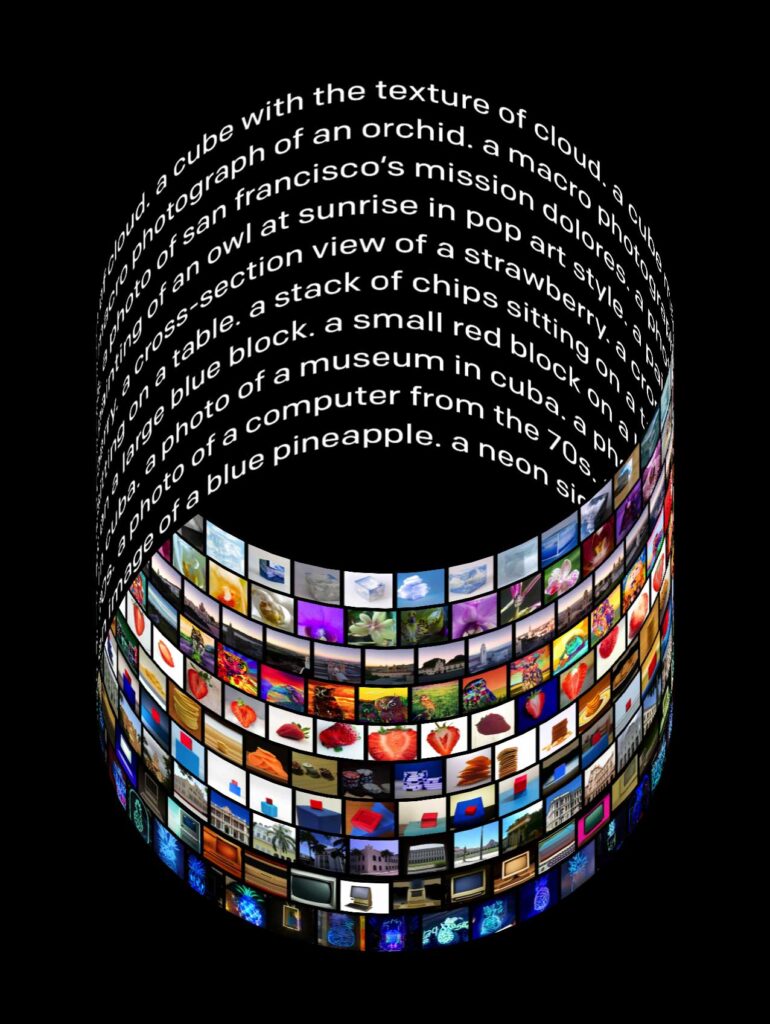

OpenAI, a research group based in the United States, is developing technology that lets people create digital images simply by describing what they want to see.

According to the New York Times, the programme is called DALL-E, a name inspired by both "WALL-E", the 2008 animated film about an autonomous robot, and Salvador Dalí, the surrealist painter.

OpenAI, the artificial intelligence lab that Elon Musk helped found in 2015, has not yet made the programme public, but academics can sign up online to try it out. According to the company, it will eventually be available through third-party apps.

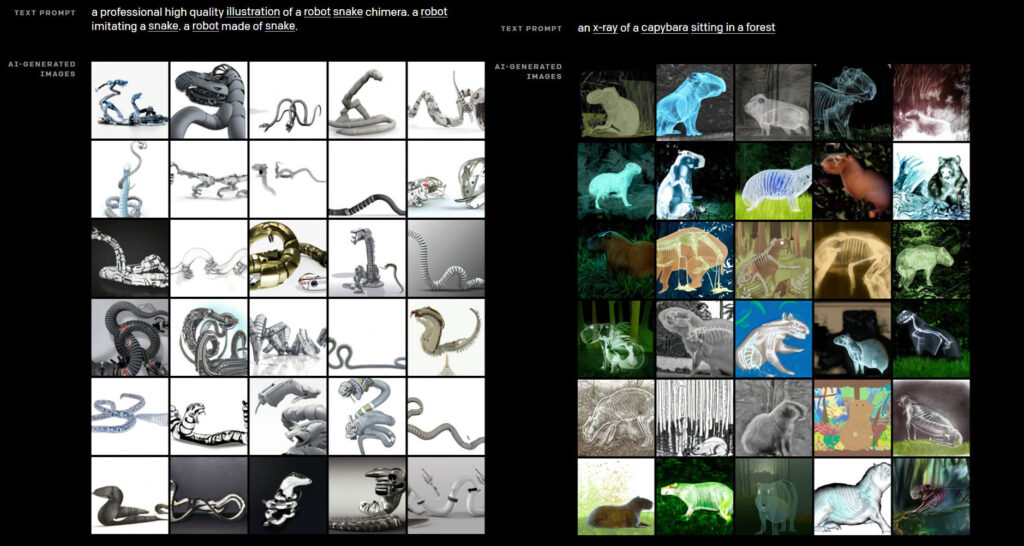

To demonstrate how the programme works, one of the researchers, Alex Nichol, typed in "a teapot in the shape of an avocado". The system generated ten different versions of a moss-green avocado teapot. "DALL-E is great with avocados," Nichol told the New York Times.

DALL-E can also edit images. Nichol demonstrated this by taking an image of a teddy bear playing a trumpet underwater and asking for a guitar instead. The system immediately produced an image of the same bear holding a guitar in its fuzzy hands.

However, the technology is not without flaws. When asked to "put the Eiffel Tower on the moon", it placed the moon above the Eiffel Tower instead. And when Nichol asked for "a sand-filled living room", it produced an image that looked more like a building site.

According to the New York Times, seven researchers spent two years developing the technology. OpenAI intends to make it available to graphic artists in the future.

Microsoft has backed the company, investing $1 billion in it in 2019.